Not too long ago, we had an upgrade issue at a customer: the upgrade timed out, and after investigation, the cause turned out to be simply our upgrade code, which took too long to modify 130000 records (well – and quite some validations, I admit, but still – it’s not that much, is it?).

This made me pause and rethink some things that I had been doing, even in my own apps (like the waldo.BCPerfTool, which, I’m now reminded, I still need to blog about 🙈). But before I go into that, let’s start from the beginning …

The issue

The context of the issue is simple: when you have too much data to change in an upgrade codeunit, your upgrade might time out, which fails the upgrade. If it fails, the data is rolled back, which means another long wait until everything is restored. All of this takes time, without any useful information for whoever is doing the upgrade. That person is pretty much wondering: is anything actually happening at the moment??

One might wonder (well, I did at least): how would Microsoft handle this? Because you know – Microsoft is refactoring, and therefore also upgrading, the Base App on multiple occasions.

Well – let’s check codeunit 104000 "Upgrade - BaseApp".

Up until v20, you could notice an interesting way of handling updates.

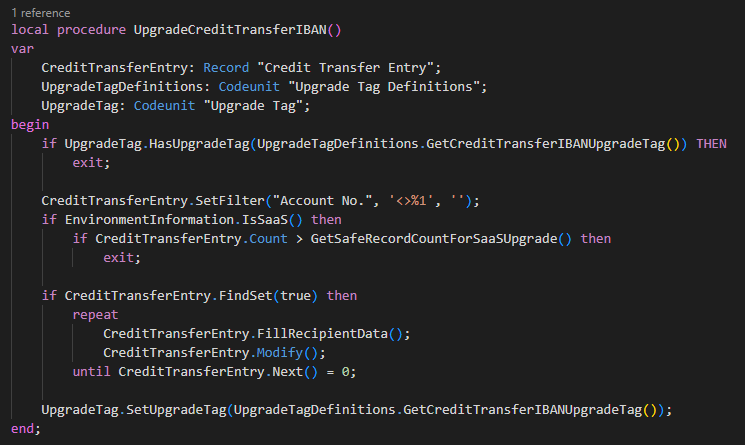

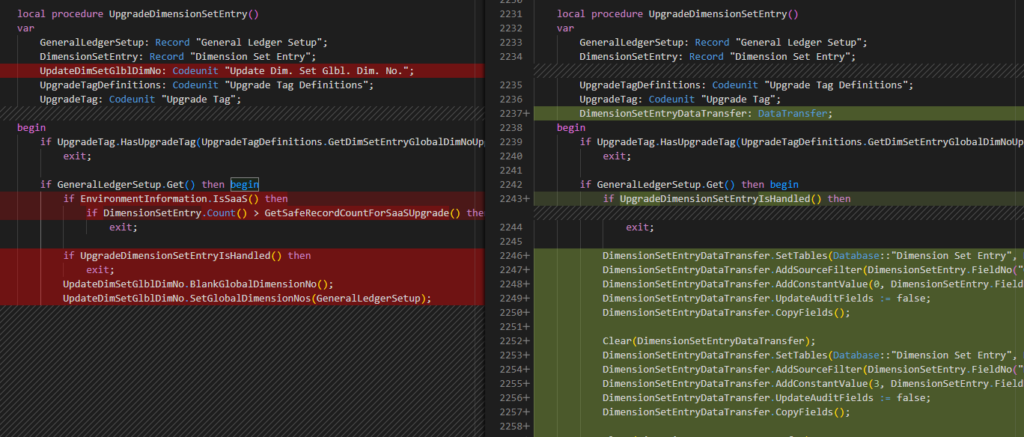

Just take this code into account:

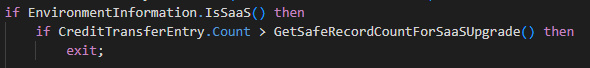

The interesting part is this:

Or in other words:

“If it’s not SaaS, just perform the upgrade. Time out. Fail .. I don’t care.”

And then:

“If there are too many records in the table, don’t upgrade the data (exit). I won’t fail, but you won’t know that I didn’t run either.”

And last but not least:

“We’ll try again in a later upgrade. You probably won’t have fewer records then, but that’s your problem.”
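A minimal sketch of that pattern, as described above. It is a paraphrase and not the literal Base App source: the "Demo Entry" table (assumed to have a Description field), the upgrade tag, the object number and the helper names are all hypothetical. Only the 300000 threshold comes from this post.

```al
codeunit 50110 "Demo Guarded Upgrade"
{
    Subtype = Upgrade;

    trigger OnUpgradePerCompany()
    begin
        UpgradeDemoEntries();
    end;

    local procedure UpgradeDemoEntries()
    var
        DemoEntry: Record "Demo Entry"; // hypothetical table, assumed to exist
        EnvironmentInformation: Codeunit "Environment Information";
        UpgradeTag: Codeunit "Upgrade Tag";
    begin
        if UpgradeTag.HasUpgradeTag(GetDemoUpgradeTag()) then
            exit;

        // The record count is only checked in SaaS; OnPrem just runs (and may time out).
        if EnvironmentInformation.IsSaaS() then
            if DemoEntry.Count() > GetSafeRecordCount() then
                exit; // Skipped silently. The tag is NOT set, so the next upgrade tries again.

        // Classic record-by-record upgrade: one Modify per record.
        if DemoEntry.FindSet(true) then
            repeat
                DemoEntry.Description := UpperCase(DemoEntry.Description);
                DemoEntry.Modify();
            until DemoEntry.Next() = 0;

        UpgradeTag.SetUpgradeTag(GetDemoUpgradeTag());
    end;

    local procedure GetSafeRecordCount(): Integer
    begin
        exit(300000); // the "golden number" mentioned in this post
    end;

    local procedure GetDemoUpgradeTag(): Code[250]
    begin
        exit('DEMO-GuardedUpgrade'); // hypothetical tag
    end;
}
```

Note how the early `exit` leaves no trace: no error, no message, no tag. That is exactly why you can't tell afterwards whether the data was upgraded.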

🤔

Obviously I’m a bit sarcastic here ;-), and I can’t shake the feeling that I must be missing something here…

Why is it ok to time out when OnPrem? Or at least, why is it ok to not handle the “too many records” part (and yes, it can be a problem OnPrem as well)? There is NO way for us to hook into those big upgrade routines for OnPrem to handle the upgrade alternatively, so .. how do we upgrade the baseapp in that case? 🤔.

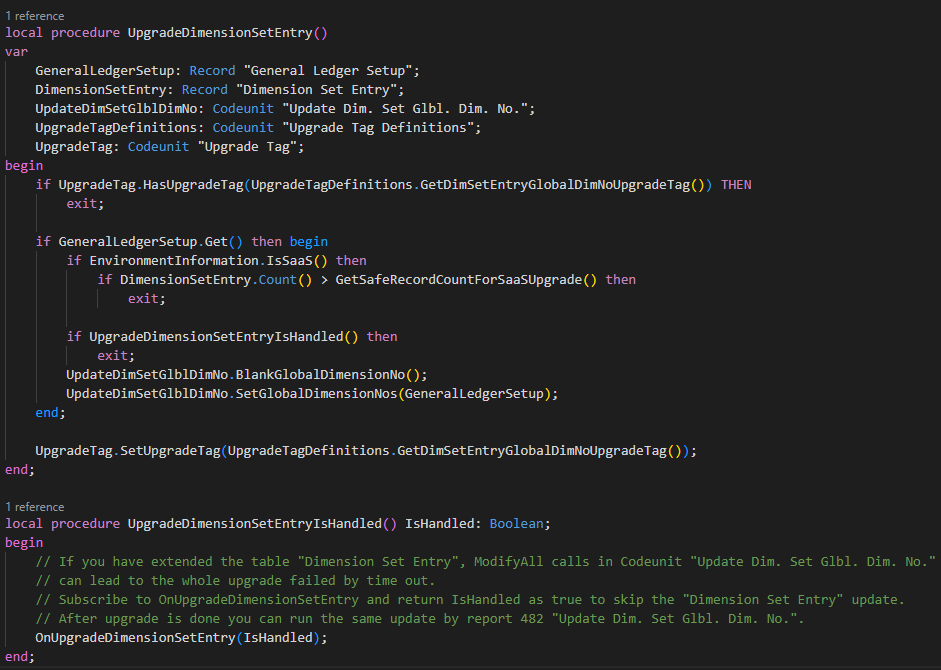

The only thing I could think of, is to manually insert the upgrade tag, and then run the actual upgrade routine ourselves in a newly developed codeunit. For me, that’s a waste of time. I would have expected an event at least, like they did for one of the occasions: UpgradeDimensionSetEntry.

I couldn’t find any alternative to manually upgrade the data in case there were too many records, which would mean that Microsoft just exits the code, without failing. Honestly, I can’t believe I’m right in this – so please, if you know the alternative way to handle the upgrade, let me know in the comments down below! 🤔

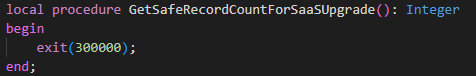

Nevertheless – I could find 6 places in this codeunit with a similar pattern to avoid “too many records”. And by the way – the “golden number” apparently is 300000 records 🤷‍♂️

The good news is – this was true up until v20. Because since then – we have a new option to speed up upgrades:

The DataTransfer Type

If you don’t know this new ability, I’d have to refer you here. I also mentioned it in my BCTechDays session. Basically, it’s an awesome way to speed up most data upgrades. A typical data upgrade loops over all records, sets values, and calls a modify per record; with DataTransfer, the same change is done in one SQL statement for all records at once, which can speed up the upgrade by a factor of around 70. More performance information on that here on Stephano’s blog.

If you look into the changes in Microsoft’s upgrade codeunit (codeunit 104000 "Upgrade - BaseApp") from v20 to v21, you can see a lot of usage of the DataTransfer type now. Obviously.

Here’s an example:
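A minimal sketch of what a DataTransfer-based upgrade can look like. This is not the literal Base App code: the two demo tables, the codeunit and all object numbers are hypothetical, and the scenario (copying a Description from a legacy table to its replacement) is only an assumption for illustration.

```al
table 50100 "Demo Legacy Entry"
{
    DataClassification = CustomerContent;

    fields
    {
        field(1; "Entry No."; Integer) { }
        field(2; Description; Text[100]) { }
    }

    keys
    {
        key(PK; "Entry No.") { Clustered = true; }
    }
}

table 50101 "Demo Entry"
{
    DataClassification = CustomerContent;

    fields
    {
        field(1; "Entry No."; Integer) { }
        field(2; Description; Text[100]) { }
    }

    keys
    {
        key(PK; "Entry No.") { Clustered = true; }
    }
}

codeunit 50102 "Demo DataTransfer Upgrade"
{
    Subtype = Upgrade;

    trigger OnUpgradePerCompany()
    begin
        CopyDescriptions();
    end;

    local procedure CopyDescriptions()
    var
        LegacyEntry: Record "Demo Legacy Entry";
        DemoEntry: Record "Demo Entry";
        DescriptionTransfer: DataTransfer;
    begin
        DescriptionTransfer.SetTables(Database::"Demo Legacy Entry", Database::"Demo Entry");
        // Match source and destination rows on their primary key.
        DescriptionTransfer.AddJoin(LegacyEntry.FieldNo("Entry No."), DemoEntry.FieldNo("Entry No."));
        // Copy the Description of each matching source row into the destination row.
        DescriptionTransfer.AddFieldValue(LegacyEntry.FieldNo(Description), DemoEntry.FieldNo(Description));
        // Only take source rows that actually have a description.
        DescriptionTransfer.AddSourceFilter(LegacyEntry.FieldNo(Description), '<>%1', '');
        // Updates the existing destination rows in bulk instead of looping in AL.
        DescriptionTransfer.CopyFields();
    end;
}
```

Keep in mind that DataTransfer bypasses table triggers and events, so it only fits upgrades where no validation logic has to run per record.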

It solves so many possible time-outs..

But not all…

Definitely not all. Even within the same codeunit, you still see the same pattern as above (the UpgradeCreditTransferIBAN procedure): it has not been solved with DataTransfer (simply because that’s not possible), the record count is only checked in SaaS, and the upgrade is skipped when there are more than 300000 records, without (again, correct me if I’m wrong) a message or a possibility to run the upgrade manually. So .. same problems, just not as often anymore.

All I can say – please be aware of this current behaviour – and also be aware of your options when YOU are developing Upgrade Codeunits.

What are my options when I’m creating my own upgrade code?

Well, let me touch on 3 valid options that I’m thinking of.

1. An upgrade codeunit

A normal upgrade codeunit is the most obvious one. And yes, I provided a snippet tupgradecodeunitwaldo in the CRS AL Language Extension for VSCode. I won’t spend too much time on explaining how to create one (it’s pretty much all in the snippet), although do remember the minimum requirements:

- Subtype Upgrade

- Handle Upgrade Tags

- Handle the event “OnGetPerCompanyUpgradeTags”
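The three requirements above can be sketched as follows. This is a minimal sketch and not the output of the snippet: the object numbers, names and the tag value are hypothetical, and the actual upgrade logic is left as a placeholder.

```al
codeunit 50112 "Demo Tagged Upgrade"
{
    Subtype = Upgrade; // requirement 1: Subtype Upgrade

    trigger OnUpgradePerCompany()
    var
        UpgradeTag: Codeunit "Upgrade Tag";
        DemoUpgradeTags: Codeunit "Demo Upgrade Tags";
    begin
        // Requirement 2: use an upgrade tag so the routine runs only once per company.
        if UpgradeTag.HasUpgradeTag(DemoUpgradeTags.GetDescriptionUpgradeTag()) then
            exit;

        UpgradeDescriptions();

        UpgradeTag.SetUpgradeTag(DemoUpgradeTags.GetDescriptionUpgradeTag());
    end;

    local procedure UpgradeDescriptions()
    begin
        // Placeholder for the actual data upgrade (DataTransfer if at all possible).
    end;
}

codeunit 50113 "Demo Upgrade Tags"
{
    procedure GetDescriptionUpgradeTag(): Code[250]
    begin
        exit('DEMO-DescriptionUpgrade'); // hypothetical tag value
    end;

    // Requirement 3: register the tag, so new companies are not upgraded needlessly.
    [EventSubscriber(ObjectType::Codeunit, Codeunit::"Upgrade Tag", 'OnGetPerCompanyUpgradeTags', '', false, false)]
    local procedure RegisterPerCompanyTags(var PerCompanyUpgradeTags: List of [Code[250]])
    begin
        PerCompanyUpgradeTags.Add(GetDescriptionUpgradeTag());
    end;
}
```

Putting the tag definition and the subscriber in a separate, normal codeunit is a design choice in this sketch; it keeps the upgrade codeunit itself limited to upgrade triggers.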

And again – don’t forget about the DataTransfer possibility. If you can apply this, it makes all the sense in the world!

Here you can find a very simple example: waldo.BCPerfTool/NormalUpgradeWPT.Codeunit.al at main · waldo1001/waldo.BCPerfTool (github.com)

2. A Report

You could also go for a “processing only” report that can be run manually.

The advantage is that the transaction is not part of the upgrade of the app. So the app will install fast .. and the upgrade routine won’t fail the installation of the new version of your app. And the latter obviously can be a disadvantage as well.

Honestly, this way doesn’t really appeal to me, although it can be useful, because:

- Consultants can run chunks of upgrades in multiple runs

- Consultants are able to re-run the routine if necessary

Be aware of the constraints though: You can’t use reports when you want to transfer data from obsoleted objects to new objects. Also, to be able to run DataTransfer types, you need to be in upgrade-mode, which is not possible from reports. Then again – IF I need to move data from obsoleted objects, or IF I can use DataTransfer – I should be using a normal upgrade codeunit anyway.
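A minimal sketch of such a processing-only report, assuming a hypothetical "Demo Entry" table with an "Entry No." and a Description field; the object number and the upgrade logic itself are made up for illustration.

```al
report 50130 "Demo Manual Upgrade"
{
    Caption = 'Demo Manual Upgrade';
    ProcessingOnly = true;
    UsageCategory = Tasks;
    ApplicationArea = All;

    dataset
    {
        dataitem(DemoEntry; "Demo Entry") // hypothetical table, assumed to exist
        {
            // Lets a consultant upgrade in chunks, e.g. one range of entry numbers per run.
            RequestFilterFields = "Entry No.";

            trigger OnAfterGetRecord()
            begin
                // Skip records that are already upgraded, so the report can safely be re-run.
                if DemoEntry.Description = UpperCase(DemoEntry.Description) then
                    CurrReport.Skip();

                DemoEntry.Description := UpperCase(DemoEntry.Description);
                DemoEntry.Modify();
            end;
        }
    }
}
```

The filter on the request page covers the "chunks" scenario, and the skip check covers the "re-run" scenario from the list above.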

3. Schedule a Job Queue Entry

Last but not least: why not simply schedule a job queue entry from the upgrade? That way:

- there is no manual intervention from a consultant needed (like with a report)

- The app installs immediately (no time out)

- The data will upgrade in the background, and will be available asap

Obviously, the same constraints apply: this is only applicable when you don’t have to read from obsoleted tables (or fields). And, since this is not running during an upgrade, you are not able to use the DataTransfer type either.

How does it work? Well, you need a few components.

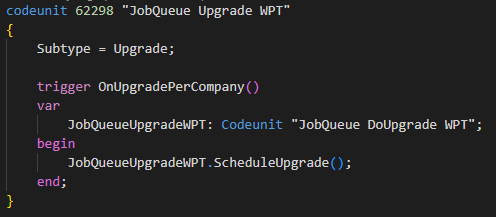

First, you need an upgrade codeunit, because you want to schedule the procedure when upgrading your app. Example:

So the only thing the upgrade routine does is schedule a codeunit as a Job Queue Entry.

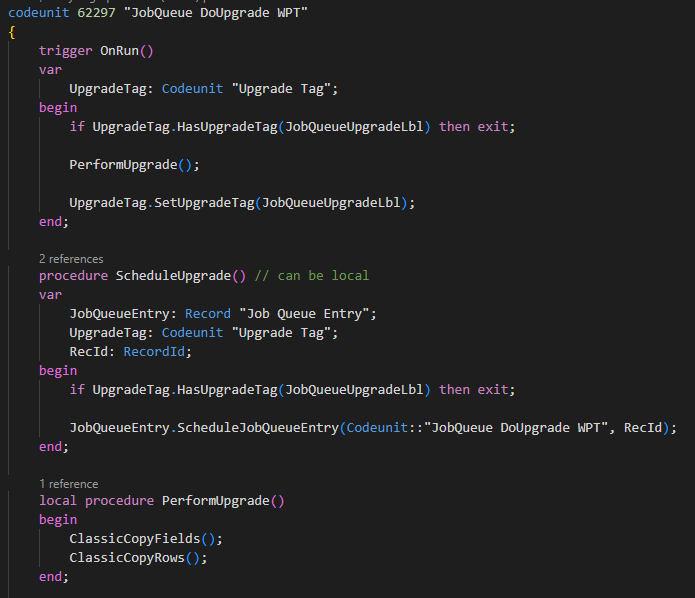

Next, you need a second (normal) codeunit where your code will run. Because the job queue cannot “run” an upgrade codeunit, you can’t simply reuse that codeunit 🤷‍♂️. In this second codeunit, you have an OnRun trigger that executes your upgrade code.
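The two components can be sketched like this. It is a minimal sketch and not the code from the repository or the snippet: the object numbers, names and tag are hypothetical, and enqueueing through codeunit "Job Queue - Enqueue" is just one way to schedule the entry.

```al
codeunit 50120 "Demo Schedule Upgrade"
{
    Subtype = Upgrade;

    trigger OnUpgradePerCompany()
    var
        UpgradeTag: Codeunit "Upgrade Tag";
    begin
        // The tag only records that the job was scheduled, not that the data is upgraded.
        if UpgradeTag.HasUpgradeTag(GetScheduledTag()) then
            exit;

        ScheduleBackgroundUpgrade();

        UpgradeTag.SetUpgradeTag(GetScheduledTag());
    end;

    local procedure ScheduleBackgroundUpgrade()
    var
        JobQueueEntry: Record "Job Queue Entry";
    begin
        JobQueueEntry.Init();
        JobQueueEntry."Object Type to Run" := JobQueueEntry."Object Type to Run"::Codeunit;
        JobQueueEntry."Object ID to Run" := Codeunit::"Demo Background Upgrade";
        JobQueueEntry.Description := 'Demo data upgrade';
        JobQueueEntry."Earliest Start Date/Time" := CurrentDateTime();
        // Inserts the entry and schedules it to run in the background.
        Codeunit.Run(Codeunit::"Job Queue - Enqueue", JobQueueEntry);
    end;

    local procedure GetScheduledTag(): Code[250]
    begin
        exit('DEMO-BackgroundUpgradeScheduled'); // hypothetical tag
    end;
}

codeunit 50121 "Demo Background Upgrade"
{
    // A normal codeunit: this is what the job queue actually runs.
    trigger OnRun()
    begin
        UpgradeData();
    end;

    local procedure UpgradeData()
    begin
        // Placeholder for the actual upgrade loop. DataTransfer is not available here.
    end;
}
```

Since the background codeunit may be run more than once, it is worth writing its upgrade logic so that a second run does no harm.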

You can find an example here: waldo.BCPerfTool/BCPerfToolDemos/src/02 Coding/17 Upgrade Code/3. Upgrade with JobQueue at main · waldo1001/waldo.BCPerfTool (github.com)

I just added a snippet for this one in the CRS AL Language Extension as well, which is called “tUpgradeCodeunitJobQueuewaldo”. You’ll see that the snippet generates 2 objects (the two necessary codeunits) in one file. Do know that the intention is to split them into two files. I just wanted to provide the pattern as part of the snippet 🤷‍♀️. Also, this is just a first version – all comments are always appreciated ;-).

Conclusion

Up until today, I haven’t been involved with upgrade issues like this too much – so I might have things wrong, or there might be better solutions. I’m always eager to hear, so please .. share!

But other than that – upgrade routines are something we really have to pay attention to: both Microsoft’s and our own. Get yourself familiar with “DataTransfer”, always verify that all data has been upgraded (also Microsoft’s) – and make sure your own data upgrade is managed as efficiently as possible ;-)! I hope this blogpost helps at least a little bit ;-).

3 comments

I expect that MS is handling these “big” data in the upgrade by running “upgrade SQL scripts” directly on the SQL after the upgrade is done. It means they “moved” the upgrade to SQL level. But this is just expectation…

Really helpful post! We tried to postpone the upgrade functionality to be handled by a job queue entry for the first time. Unfortunately, this is a bit tricky to control with the upgrade tag. On upgrading the extension, the job queue entry is created and all other dependent apps are reinstalled, which might have a SetAllUpgradeTags implemented in their install codeunit. So the job queue entry will never be processed. Did anybody else have this struggle?

Ok, solved it 😀 Instead of registering the upgrade tags in the EventSubscriber OnGetPerCompanyUpgradeTags (which is raised by the SetAllUpgradeTags function), I had to subscribe to OnCompanyInitialize and OnInstallAppPerCompany 🙂
